IAC24: Responsible AI is an IA Skillset

In this talk for Information Architecture Conference 2024, I made the case for information architects being the adults in the rooms where AI-enabled experiences are designed and built.

Hype may come and go, but artificial intelligence is not going away. You will work on an AI experience during your career, regardless of your job title.

AI experiences require programmers and designers to make unprecedented ethical decisions. Instead of designing a knowable set of content and interactions, AI experiences require us to design for probabilities and potential risks, with limited control over outcomes.

Responsible AI is the process of thinking before you build. As an information architect, you already have what it takes to be a steward of responsible AI.

Slides and transcript

Today I’m going to tell you a story.

It begins with a happy information architect (me, hello!) in her little information architect castle. I lived a life that many information architects dream of: designing navigation, debating taxonomy terms, and making cute little content models for my cute little 20m page website. Until January of 2023.

Suddenly, Sam Altman is on every magazine cover. OpenAI is the new Google, and Google is dead, or will be at any moment, if you believe tech bro prophecy. In 3 weeks, everyone we know will be rebranding themselves on LinkedIn as AI Experts. Soon, emails from leadership start flopping into my inbox like spawning salmon heaving themselves upstream in September: “What are we doing with AI?” “Where is AI on the roadmap?” “Let’s fix it with AI!”

At this point I pace my home office, muttering under my breath between sips of tepid coffee. In a world where I already suffer fools on a daily basis, now I have to suffer the AI HYPE CYCLE! I’m not a joiner, so is that why I’m so conflicted? Or is there something more to these instincts, every fiber of my being screaming that AI is not the panacea the tech bros prophesy? As I pace, I list off the problems on my fingers:

There are profound ethical implications of AI development and governance on a global level that I’m not even addressing in this story.

AI uses an intense amount of energy to operate. Common sense tells me this is not great.

In the case of ChatGPT, we have a parrot that ate the internet and is regurgitating it one decontextualized word at a time. Yet the hype cycle has crowded out room for critical thought: toxic optimism and enthusiasm have overtaken common sense, and most people don’t understand what these systems are actually capable of (or incapable of)!

There are no adults in the room.

We desperately need critical, cynical, systems thinkers who also care about humans in the rooms where AI experiences are being designed and built.

Then one gloomy day in late January, my quest, my fate, arrives via email. I’m tapped to join a chaotic, extremely enthusiastic band of technologists who have been given a challenge: Ship an AI feature on Microsoft Learn, and get it done in 12 weeks. (At Microsoft, we have a cultural quirk: arbitrary shipping deadlines enforced by developer conferences, where we present new shiny things accompanied by fireworks.)

Suddenly, my academic concerns are no longer theoretical; they are of-the-moment concerns. My engineers and PMs are running after feature ideas in a hundred different directions, chasing that ChatGPT fever dream. Designers are making shit up as they go. There I am, watching this unfold…

…wildly unimpressed. As an information architect, it is my job to be unimpressed by chaos and ambiguity. It’s how we maintain the stamina necessary to lead others through it and make sense of it all. That’s easier to do when you know a little about the domain, and frankly, I knew very little about AI. Well, the only way out is through! My first challenge: Figure out what’s so different about designing for AI.

There’s a lot that’s different, and that’s a whole talk of its own. But the thing I want to make clear today is the fundamental difference between traditional, deterministic programming and probabilistic, machine learning programming.

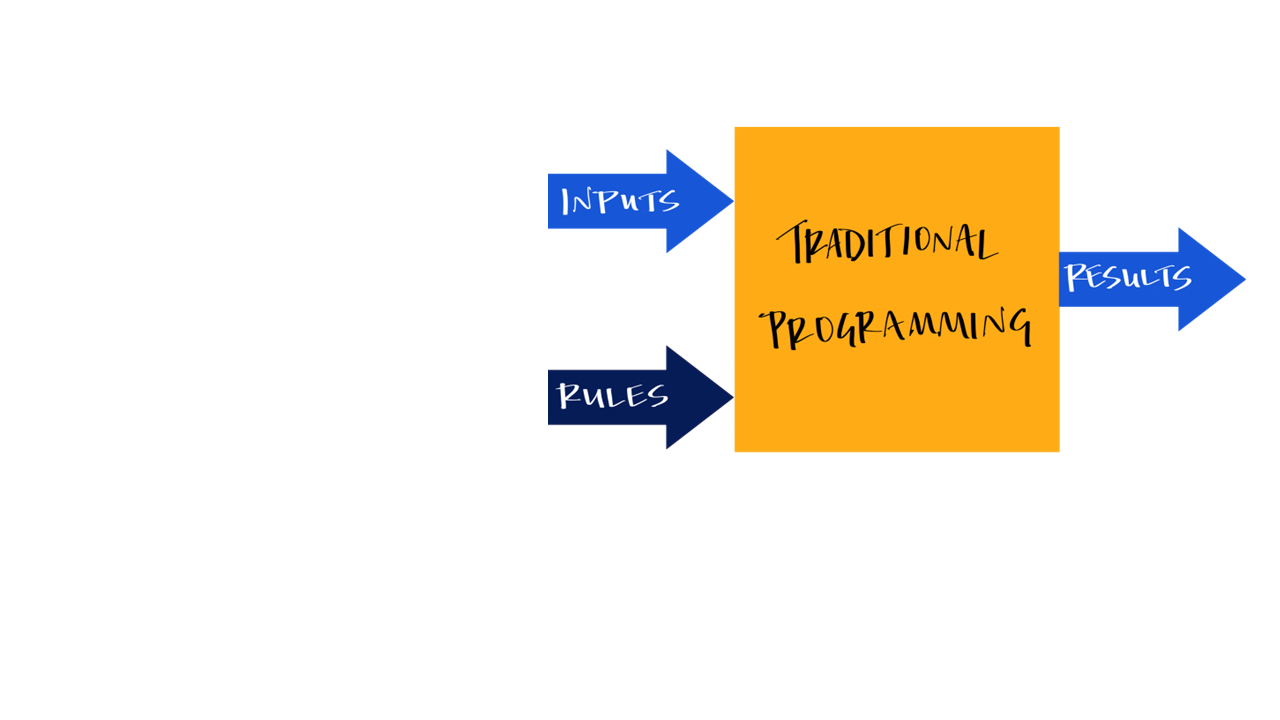

Our UX design muscles were built on traditional (deterministic) programming, assuming there are known rules that provide predictable outputs.

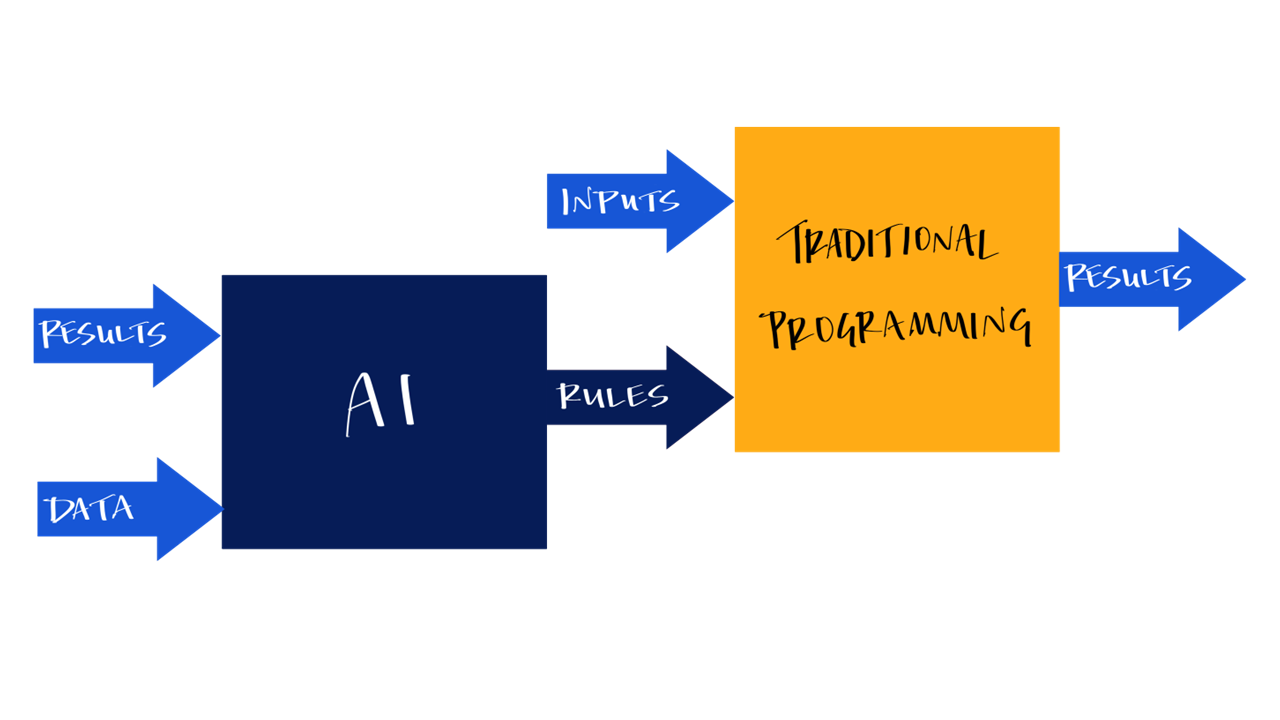

Artificial intelligence (machine learning) uses probabilistic programming. This starts with inputs as they are observed “out in the world” (usually really messy, problematic data; this is ChatGPT “eating the internet”) and generates rules from them by identifying patterns. Then those rules get fed into some interface you designed and are used to generate output: an answer, an action, a decision of some kind.
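To make the contrast concrete, here is a toy Python sketch. It is not how large language models work; it swaps in a simplified naive-Bayes-style word count as a stand-in for “generating rules from patterns.” The function names, labels, and example questions are all hypothetical. The deterministic router follows a rule a person wrote; the probabilistic router derives its “rule” from example data and returns probabilities rather than one certain answer.

```python
from collections import Counter, defaultdict

# Deterministic: a person writes the rule, so the same input
# always produces the same, predictable output.
def route_deterministic(question: str) -> str:
    if "sql" in question.lower():
        return "SQL Server"
    return "General"

# Probabilistic: the "rule" is derived from patterns in example data.
def learn_word_counts(examples: list[tuple[str, str]]) -> dict[str, Counter]:
    """Count how often each word appears in questions with each label."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    return counts

def route_probabilistic(question: str, counts: dict[str, Counter]) -> dict[str, float]:
    """Return a probability per label instead of one certain answer.

    Simplified naive-Bayes-style scoring: class priors are ignored,
    which is only reasonable here because the toy labels are balanced.
    """
    words = question.lower().split()
    vocab = {w for c in counts.values() for w in c}
    scores = {}
    for label, c in counts.items():
        total = sum(c.values())
        score = 1.0
        for w in words:
            # Add-one smoothing so an unseen word doesn't zero out a label.
            score *= (c[w] + 1) / (total + len(vocab))
        scores[label] = score
    norm = sum(scores.values())
    return {label: s / norm for label, s in scores.items()}

# Hypothetical training data: examples "observed out in the world".
examples = [
    ("how do I back up my sql database", "SQL Server"),
    ("sql query runs slowly", "SQL Server"),
    ("reset my azure vm password", "Azure"),
    ("azure storage account keys", "Azure"),
]
counts = learn_word_counts(examples)

print(route_deterministic("Why is my SQL query slow?"))
print(route_probabilistic("slow azure sql database", counts))
```

Nobody wrote down the learned version’s rules; its behavior depends entirely on which examples it happened to see, and it can be confidently wrong about inputs unlike them.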

Many AI systems are black boxes, and we don’t know exactly what patterns these systems are deducing. They can be wrong! We need to design the interface so that people can perform error analysis, recover, or be protected when the system they are using fails. Because it will fail at some point.

To design for AI, our design process needs new guardrails, heuristics, and patterns, since we can’t actually know what is going to happen in an AI-driven interaction. We can only guess, steer, put up lots of bumpers, and then monitor very closely.

The other different thing, at Microsoft at least, is that now we have a legal team requiring us to vet our AI features against a set of standards, and they’re calling this “Responsible AI”. This is a level of forethought and oversight that is unheard of in my corner of Microsoft. I love it.

Responsible AI is a process of thinking about what could go wrong, intentionally making decisions that minimize the number of things that can go wrong, and then having a plan for what to do when things do go wrong. In therapy we call this “coping ahead”.

My story today revolves around Microsoft’s Responsible AI standard. This is not the only AI standard out there, but it’s the one I have to use, and it’s also very robust compared to other publicly available standards.

Microsoft’s RAI standard uses a set of 6 governing principles to steer technical and user experience design:

Fairness

Inclusiveness

Reliability and safety

Transparency

Privacy and security

Accountability

At a high level, ‘responsible AI’ at Microsoft means that for each of these principles, you:

List all the specific, potential ways you could hurt people through the lens of that principle (How might the system treat people unfairly – who, and in what ways? How might the system put people in danger? How might the system violate their privacy? Exclude them? Mislead them?)

Commit to the ways in which you’re going to mitigate that harm.

Commit to the ways in which you’re going to monitor the system so that if that harm occurs, you can deal with it.
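Those three commitments amount to bookkeeping: every harm gets a principle, a mitigation, and a monitoring plan. Below is a minimal Python sketch of that bookkeeping, assuming a hypothetical record format; the `Harm` class, the `find_gaps` helper, the sample mitigations, and the principle assigned to each example harm are illustrative guesses, not Microsoft’s actual Impact Assessment template. It flags principles with no harms listed and harms that lack a mitigation or a monitoring plan.

```python
from dataclasses import dataclass, field

# The six principles of Microsoft's RAI standard, as listed above.
PRINCIPLES = (
    "Fairness",
    "Inclusiveness",
    "Reliability and safety",
    "Transparency",
    "Privacy and security",
    "Accountability",
)

@dataclass
class Harm:
    """One potential harm, in a hypothetical record format."""
    principle: str
    description: str
    mitigations: list[str] = field(default_factory=list)
    monitoring: list[str] = field(default_factory=list)

def find_gaps(harms: list[Harm]) -> list[str]:
    """List unexamined principles and harms missing a mitigation or monitoring plan."""
    problems = []
    covered = {h.principle for h in harms}
    for principle in PRINCIPLES:
        if principle not in covered:
            problems.append(f"No harms listed under {principle}")
    for h in harms:
        if not h.mitigations:
            problems.append(f"No mitigation for: {h.description}")
        if not h.monitoring:
            problems.append(f"No monitoring for: {h.description}")
    return problems

harms = [
    Harm(
        principle="Privacy and security",
        description="Explains how to hack into someone else's Azure tenant",
        mitigations=["Decline requests for attack instructions"],  # hypothetical
        monitoring=["Review flagged conversations weekly"],  # hypothetical
    ),
    Harm(
        principle="Reliability and safety",  # assumed mapping
        description="Gives a useless answer during a hospital security breach",
    ),
]

for problem in find_gaps(harms):
    print(problem)
```

An empty harms column, like the one described later in this story, shows up here as a gap under every principle.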

Once I understood that responsible AI is the act of thinking before building, I realized…I already do this. This is Microsoft’s legal arm coming down from Floor 7 to help me do my job. We (IAs) already do this! We’ve been training for this moment our entire careers.

We are the adults in the room.

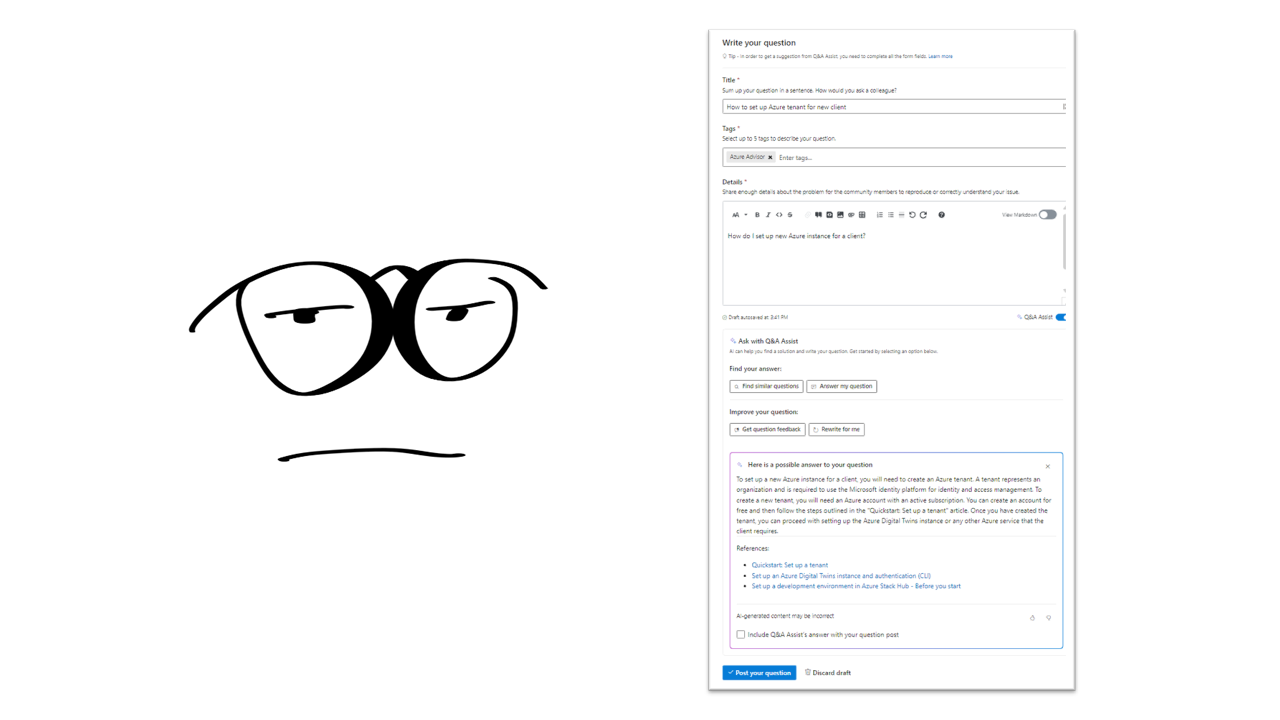

Back to February of 2023 here in Seattle. I’m meeting with a group of engineers, PMs, and designers to design and build an AI feature for Microsoft Learn. We’ve aligned on an AI use case for our website: We’ll use a large language model to answer user questions and help improve the writing of their questions on Q&A, which is our version of Stack Overflow. In order to actually ship this, we need to show that our plan is compliant with the Microsoft Responsible AI standard.

But we can’t just hand over a typical feature spec to the review board. No, no, no. We have to complete the Impact Assessment. The operational cornerstone of the RAI compliance process at Microsoft, it is a massive and cumbersome worksheet written by lawyers, and IMHO one of the best things that has happened to product management & design at Microsoft. This requires us to align on intended use cases, get really clear on how it “ought to work”, admit who we’re helping and who we’re hurting, list all the ways it could go wrong and what we’re going to do about it, define a data governance plan, and more. It drags us through hundreds of criteria and requirements. The decisions that come out of this activity serve as our compass as we move into technical design and UI design.

I get together with the feature team and we start filling out this impact assessment. You can see here that the act of doing this is the very first step in ensuring we’re meeting “accountability” goals.

The principle of Accountability is about thinking critically about whether AI is the right solution for the problem at hand, getting really clear on the purpose of the system you’re building, defining your data governance plan, and figuring out details around oversight and control.

As we’re describing how things are going to work, something pretty predictable starts happening. It becomes clear that:

We don’t agree on the purpose of the system (folks are proposing diverging use cases that don’t have real world value. “The AI can tell you if your question is good.”)

We don’t share a mental model for how the feature ought to work (folks are proposing absolutely incoherent user flows that favor the system constraints over the user needs. “The AI can provide generic suggestions for how to write a better question in general, but the user will have to edit their own question based on generic feedback.”)

We don’t all understand the limitations of the AI (folks are proposing ways it ought to work that are not technically possible. “The AI knows how all of our products work together.”)

So I did here what I would do in any conversation:

Identify assumptions and call them out, get folks to acknowledge and unpack them.

Identify points where we have to make decisions, and identify the implications of those decisions.

Help stakeholders get to a shared understanding.

This doesn’t sound so different from how I’m sure you all work every day. You already do this. In this case, we’re just doing it in the name of responsible AI.

There’s this other part of the impact assessment that you have to do under ‘Accountability’, and that is to list the potential benefits and potential harms for every stakeholder of the system. This includes internal stakeholders and end users of the system, like customers.

When my colleagues took a first pass at filling this out, they did that thing people do when they’re emotionally invested in shipping something: they were great at filling out the potential benefits and left the potential harms column empty. “People will just be asking technical questions; there’s not much that can go wrong.”

Me: “OK, cool, but what if someone asks what the best solution is for moving to the cloud, and the system answers ‘AWS’ (a competitor)?”

Them: “Yeah, that would be bad.”

“What if someone asked for instructions on how to hack into someone’s Azure tenant and the system gave them an answer?” (write it down!)

“What if the IT admin of a major hospital system asks for emergency support during a high-threat security breach that has shut down access to patient records, and the system responds ‘IDK, did you try restarting?’” (write it down!)

I can keep going. But you get the idea.

There was a palpable sense of fear when I started asking those questions. It felt to me like people were afraid to think about what could go wrong. Maybe it felt like manifesting – if I write it down, it could go wrong. Or maybe it felt like liability – if I write it down and it happens, I’m on the hook. Or maybe it just felt wrong to go against the AI hype cycle.

But you know who is not afraid to be wrong, to ask questions, to go against hype cycles? Information architects! So I kept prodding, kept pushing…

Eventually we made a big list of all those harms, argued over them, and then, later in the impact assessment, wrote down what we’re going to do to mitigate them.

What I’m doing in that conversation is thinking critically, seeing the downstream and upstream implications of seemingly benign decisions, and being brave.

IAs do this every day. You already do this. In this case, we’re just doing it in the name of responsible AI.

Most of the work of doing responsible AI is in the early decision making, before an interface or a mockup is even a glint in the designer’s eye. But there are obviously interface design implications here, too, since users typically interact with AI in some kind of interface.

At the same time we’re doing this impact assessment, designers are working in parallel to design the interface for the feature. Remember, it’s early 2023; we don’t have shared patterns for AI interactions yet. We have some central guidelines and some examples of teams who have done it well, but there’s not a pattern library or a component library for AI interactions at this point. The designers are working in pure chaos, mostly making things up as they go.

Part of my responsibility to this team is to review the interface against the promises we made in the impact assessment, but more generally I also make sure the interfaces honor basic principles of responsible human-AI interaction. Luckily, we DO have some central guidelines for human-AI interaction at this point: the HAX Toolkit, which is public, so go check it out. I used the HAX guidelines as a rubric, reviewed the interface designs against that rubric, and suggested changes and specified requirements that needed to be met. We iterated on the UI until it met all of the requirements.

I bet you do this all the time. IAs operate in a world of heuristics and patterns. In this case, we’re just doing it in the name of responsible AI.

Resource: Microsoft HAX Toolkit

So we finish the impact assessment, getting through all the questions for all 6 principles. We submit it to the compliance board for review.

Then…we get approval and we SPRINT to build this thing (get it? Because we pretend we’re agile). In May of 2023, we ship the new experience just in time for our big shiny conference.

We made it to the promised land! Yay! And the promised land was so delightfully…..

BORING! Delightfully boring! …This is a good thing.

Users got answers. Sometimes the answer came from the AI system directly; sometimes the answer came from a person who was able to answer more quickly because the AI helped to write a clearer question.

Our system has not yet fallen in love with any users.

What delights me more is this: We were one of the first teams at Microsoft to ship a responsible AI feature to general availability, and one of the teams most engaged with the compliance process.

We take that set of standards very seriously.

I don’t think that it’s a coincidence that we’re also the only team to have dedicated information architects.

This story cannot end happily ever after, with me, your hero, riding off into the sunset. As long as that AI system is deployed, there is no “done”; we never know what could happen.

So this is where I beseech you to be the adult in your next meeting about AI:

You have the skills to do it.

You have the tools to do it.

You have a viewpoint that matters.

We need you.

You are the adult in the room.

Source materials and resources

People & institutions to check out

Distributed AI Research Institute (DAIR) (dair-institute.org)

Yoshua Bengio and Max Tegmark of the Future of Life Institute

Dr. Joy Buolamwini and Tawana Petty from the Algorithmic Justice League

Dr. Safiya Umoja Noble (safiyaunoble.com)